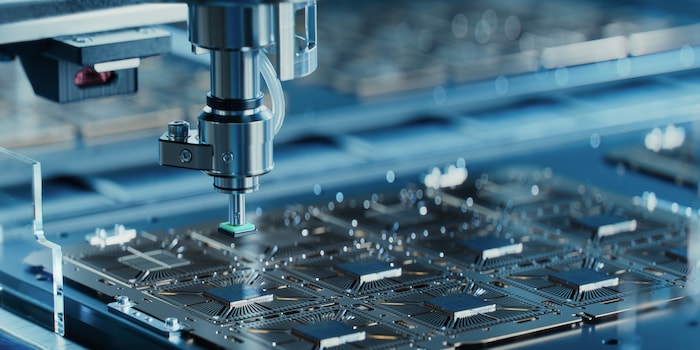

Start-up combines CPU and GPU in the same core

Classic systems have separate CPU and GPU cores. The company X-Silicon claims to have developed a processor architecture in which this is no longer necessary.

The San Diego start-up X-Silicon claims to have developed a microprocessor whose core is a CPU and GPU in one. It integrates graphics acceleration into a RISC-V CPU core; the result is a C-GPU. The architecture is said to be more efficient than the separate CPU and GPU cores found in existing processors from Intel or AMD. The first target applications are virtual reality, cars and Internet of Things devices.

According to X-Silicon, the new chip can handle all kinds of workloads, including artificial intelligence (AI), high-performance computing, geometry computing, and 2D and 3D graphics. According to the analyst firm Jon Peddie Research, the industry has long been looking for just such a flexible chip that scales easily.

More efficiency, no licence fees

In theory, the C-GPU offers decisive advantages over the classic architecture. It needs less buffer memory and is more efficient, because no data has to be copied back and forth between a separate CPU and GPU. Chip manufacturers can scale performance as required by combining multiple cores, which are connected via a high-bandwidth interface. Each core can take on CPU or GPU tasks independently of the others, depending on what is needed at the time.
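
To make the data-copying argument concrete, here is a deliberately simplified Python sketch. It is not X-Silicon's design; it only assumes that a discrete CPU and GPU with separate memories must copy a working buffer every time a workload switches from one to the other, while a combined core keeps the buffer where it is. The `Stage` class, the `bytes_copied` function, the stage names and the 8 MiB buffer size are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    """One step of a workload (hypothetical), tagged with the kind of unit it needs."""
    name: str
    unit: str  # "cpu" or "gpu"


def bytes_copied(stages: list[Stage], buffer_bytes: int, unified: bool) -> int:
    """Count bytes moved between CPU and GPU memory in a toy model.

    Assumption: the data starts in CPU memory. With separate CPU and GPU
    memories, the whole buffer is copied each time the next stage runs on
    the other unit. With a unified core, nothing is copied.
    """
    if unified:
        return 0
    copied = 0
    location = "cpu"  # where the buffer currently lives
    for stage in stages:
        if stage.unit != location:
            copied += buffer_bytes  # transfer to the other unit's memory
            location = stage.unit
    return copied


if __name__ == "__main__":
    MIB = 1024 * 1024
    # Hypothetical pipeline, e.g. for a car or VR headset.
    pipeline = [
        Stage("decode sensor data", "cpu"),
        Stage("run AI model", "gpu"),
        Stage("make decision", "cpu"),
        Stage("render overlay", "gpu"),
    ]
    for unified in (False, True):
        label = "unified CPU/GPU core" if unified else "separate CPU + GPU"
        print(f"{label}: {bytes_copied(pipeline, 8 * MIB, unified) // MIB} MiB copied")
```

With this pipeline the separate design copies the buffer three times (24 MiB), while the unified model copies nothing. Real systems blur this contrast, since integrated GPUs already share system memory, so the sketch only captures the idealised comparison described above.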

X-Silicon says that the Vulkan graphics interface is already running on the chips. Because the design is based on the open RISC-V standard, manufacturers can use it without paying the licence fees that apply to x86 or ARM. This should make it even more attractive. We will soon see how well it works in practice: X-Silicon plans to release the first developer kits this year.
