I tested Apple Intelligence – here’s how it fared

Apple has rolled out new versions of iOS, iPadOS and macOS. Their biggest innovation is Apple Intelligence – at least in English. Here’s my verdict on the new AI feature.

Back in June, Apple announced its new artificial intelligence (AI) feature to great fanfare. Following the new OS update, it’s now available (in English only). I gave it a whirl.

Which devices support Apple Intelligence?

Not every Apple device is equipped with the AI feature. Only more recent devices support it:

- iPad Pro, 3rd Generation (M1) or newer

- iPad mini, 7th Generation (A17 Pro)

- iPhone 15 Pro, 15 Pro Max and all versions of the iPhone 16

- MacBook Air Late 2020 (M1) or newer

- MacBook Pro Late 2020 (M1) or newer

- iMac (2021) (M1) or newer

- Mac Studio (2022) (M1) or newer

- Mac mini Late 2020 (M1) or newer

Heads-up for anyone who uses their devices in another language: Apple Intelligence currently only works in English. A rollout for other languages, including German, Italian and French, is planned for April 2025. Only then will Apple Intelligence also be available in the EU. In Switzerland, it already works.

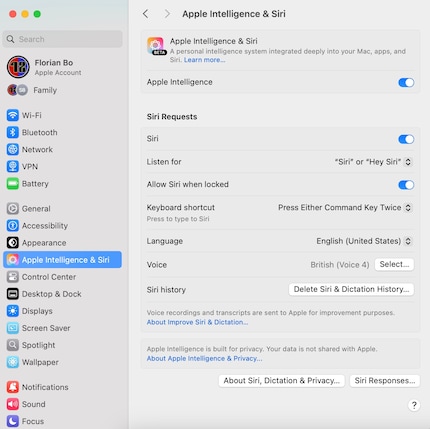

How do I set up Apple Intelligence?

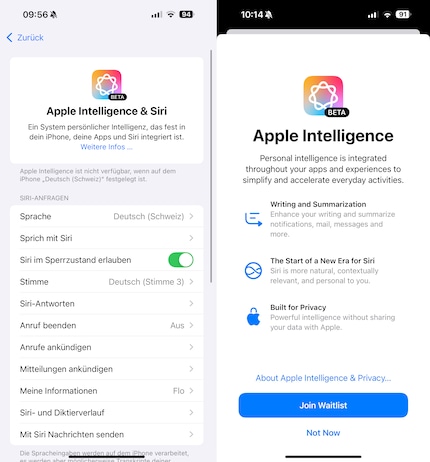

If you want to use Apple Intelligence, you’ll first have to update your operating system (iOS 18.1, iPadOS 18.1 or macOS Sequoia 15.1). If you currently use your device in a language other than English, you’ll also need to switch your system language to English (US) and restart your device. After that, you’ll find the Apple Intelligence & Siri option under Settings. The first time you open it, you’ll also see the Set up Apple Intelligence option.

Source: Florian Bodoky

In Settings, tap Apple Intelligence & Siri, then Join Waitlist. After waiting a few minutes, you’ll be taken through a straightforward setup.

Siri can chat away to you in a variety of accents, and for some of them, there are several voices to choose from. English (UK) Voice 4 has a trusty Cockney accent. That’s the one I go for.

What can Apple Intelligence do right now?

Apple is rolling out Apple Intelligence’s features in phases. Not everything the company presented in June is available yet. Here are the functions you can already use:

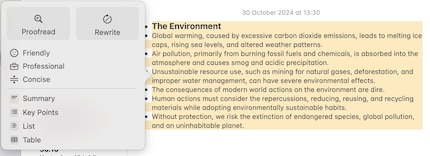

Writing Tools

Apple Intelligence offers a range of tools to make it easier for you to write and understand text. If you’ve written something in the Notes app, for instance, you can highlight it and use the Proofread function in Writing Tools to check it for typos. So far, so unspectacular – but it’s reliable and adaptive. A more interesting function is the Rewrite tool, which redrafts what you’ve written. It doesn’t make major changes to short pieces of text; instead, it removes unnecessary filler words and occasionally swaps in synonyms. It’s particularly nifty if English isn’t your first language. Rewrite also takes context into account. When I tested the feature, it didn’t come up with any weirdly constructed sentences – unlike Grammarly when it first came out.

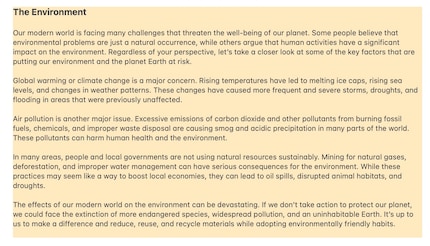

The third feature within Writing Tools is the ability to change the tone of what you’re writing. If you’re composing an e-mail, for example, you can instruct the AI to rewrite the text in a style of your choosing: Friendly, Professional or Concise. Friendly uses flowery phrasing and decorative polish to make your prose shine, while Professional cuts all the waffle and strikes a matter-of-fact tone. Concise, which suits something like a slide deck, strips out any entertaining or showy elements. Even so, Apple Intelligence does a good job in this mode too.

Finally, you can use Apple Intelligence to summarise what you’ve written. Whether you want to shorten a document, create a table or sum up key facts as bullet points, it can do it all.

You can switch between the original and the pimped-up version of your draft at the touch of a button. That way, you can compare them and decide which one you like better. In my tests, all these features were easy to use and produced solid results.

Photos app

The new AI also has its uses in the Photos app. Take the Clean Up feature, Apple’s answer to Google’s Magic Eraser, for example. You can ask the app to recognise unwanted elements in a photo and remove them. It then generates content to fill the gaps, similar to the way Adobe Photoshop works. How well the feature works depends largely on whether the AI can identify these unwanted elements on its own. Sometimes it does, but not always. When it doesn’t, you have to circle the thing you want to remove yourself. And if you don’t circle it precisely, you can’t rely on the result.

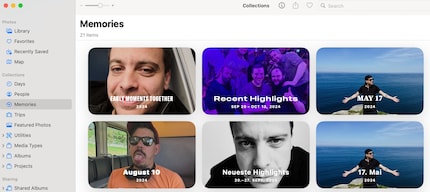

There’s also the Movie Memories function. In the Photos app, scroll down to Memories and you’ll see the Create button. Bear in mind that if you’ve only just set up Apple Intelligence, it might take a few days before this works, depending on the size of your photo library, because your images have to be processed first. For this to happen, your iPhone needs to be connected to Wi-Fi.

Once the processing is complete, you can use prompts to instruct Apple Intelligence to create a video – or rather, a slideshow of several images. An example prompt could be something like «create a memory movie out of my holiday in London and use punk music in the background».

The search function in Photos has also been improved. The iPhone now understands relatively complex search queries. Instead of simply searching for «dog» or «beer» (which, in my case, produces a jumble of results), you can be more specific, going for something like «dog next to a lake» or «dark-haired person with a beer». You can also search for a specific moment within a video. Apple calls this function Natural Language search.

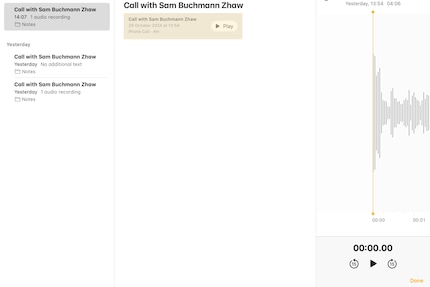

Record, transcribe and summarise phone calls

If you want to make sure you remember all the important details from a phone call, it helps to have notes, especially if, like me, you can’t listen and write at the same time. By tapping an icon during a call, you can now record the conversation. When you activate the feature, an automated voice message informs both people on the call. That being said, the announcement was pretty quiet during my call with fellow editor Samuel. If you happen to talk loudly over it – intentionally or otherwise – the other person might not catch it.

When I try it out, the call recording feature works well. My iPhone creates an audio file at the end of the conversation. However, despite our best, Swiss-accented attempts at speaking English, the automatic transcript’s nowhere to be found. As a result, there’s no option to summarise the conversation. I’ve no idea why.

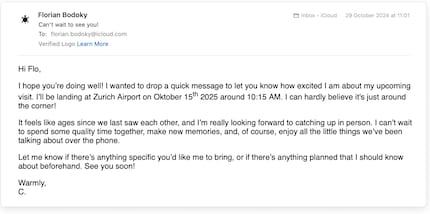

Mail and Messages app features

The Summarise function in Apple’s Mail and Messages apps is really handy. If you receive an e-mail, for example, the preview no longer shows you the beginning of the message. Instead, the AI picks out the relevant details within the message and displays them in the preview. That way, you know whether you need to open the e-mail at all.

The AI does the same thing in Messages. It’s just a shame that hardly anyone I know uses the app. Apart from 2FA codes and order confirmations from my pizza delivery service, I rarely get any messages there.

The function also covers notifications from all your other apps. If notifications from various apps pile up, the AI summarises what they’re about.

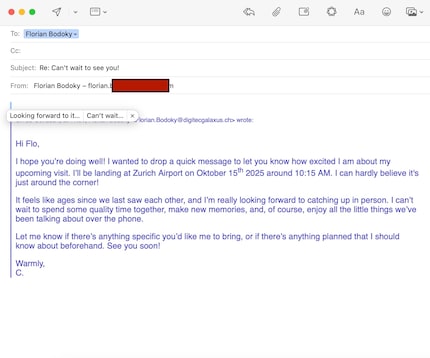

Smart replies

If you want to reply to an e-mail, say, the AI suggests possible ready-made responses. Outlook and other services have been able to do this for a long time. However, Apple’s AI automatically references things mentioned in the original e-mail, such as arrival times or important tasks.

Sorting e-mails and messages

The AI can also sort your e-mails by priority. If you get dozens of messages every day, Apple Intelligence bumps the most important ones, such as those containing a deadline, to the top. Personally, this feature’s not for me. I’d be too worried that an e-mail that matters to me would slip through the cracks. With this in mind, I’ll be keeping my inbox in chronological order.

Siri’s got a little smarter

Siri’s the longest-standing voice assistant, but unfortunately, it’s also the dumbest. Alexa and Google Assistant surpassed Siri a long time ago. But that’s starting to change. Siri can now hold longer conversations in which one request builds on the previous one. If you ask a question or give Siri a task, you can refer back to it in a later voice command and Siri will recognise the context.

Plus, there’s a Type to Siri option so you can control the voice assistant by text. If you ask me, this is really convenient. Let’s face it, even after all these years, speaking to Siri in public still feels weird. Siri can make appointments for you, finish e-mails and more. The assistant also has a stash of explanations on how to set things up on the iPhone. This is useful – even if my Cockney version does respond to «Hey Siri» with a less-than-enthusiastic «Hmm?».

What’s keeping us waiting a little longer

Many AI features are still to come. One example is Visual Intelligence, Apple’s equivalent of Google Lens. It will let you do things like translate text you’ve seen on a poster or start a web search based on an object you’ve photographed.

Image Playground, Apple’s AI-based image generator, will launch at the end of the year. It’s similar to the Genmoji feature, which generates custom emojis from prompts. Image Wand follows the same idea: if you draw a sketch in the Notes app, for example, Image Wand can convert it into a finished image. This feature’s set to be released with iOS 18.2 in December. At the same time, Apple Intelligence is scheduled to be integrated with ChatGPT.

In April 2025, Siri is set to get another upgrade. Thanks to what Apple calls on-screen awareness, the assistant will be able to draw on users’ personal context. This means it’ll recognise what you’re currently doing on your phone and offer support related to it. At the same time, Apple Intelligence will also be made available in other languages, including German, Italian and French.

I've been tinkering with digital networks ever since I found out how to activate both telephone channels on the ISDN card for greater bandwidth. As for the analogue variety, I've been doing that since I learned to talk. Though Winterthur is my adoptive home city, my heart still bleeds red and blue.